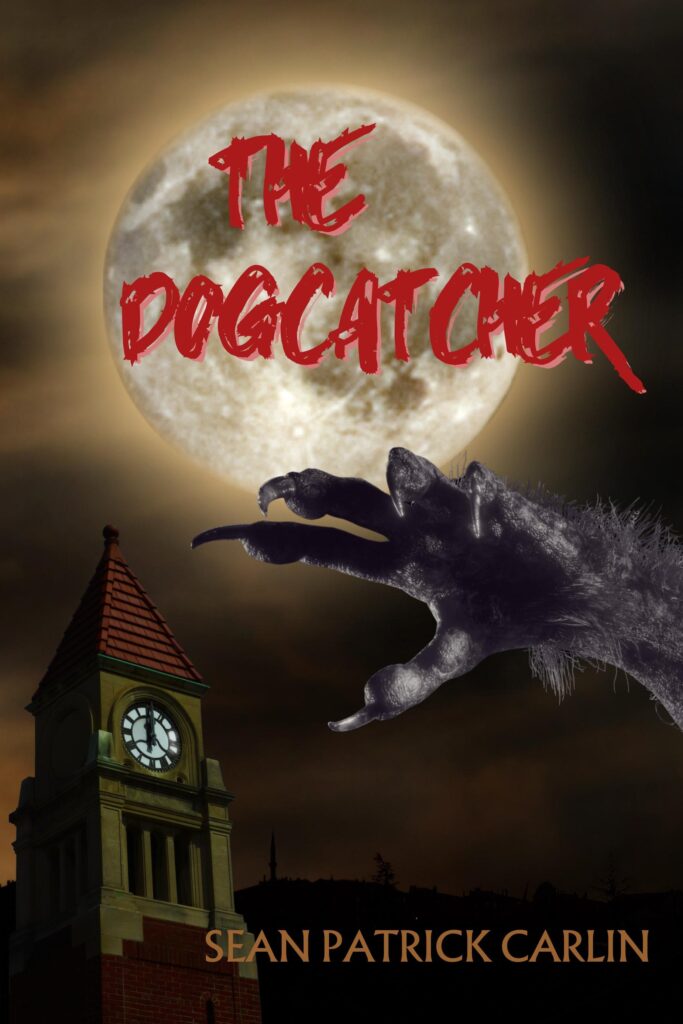

My first novel, The Dogcatcher, is now available from DarkWinter Press. It’s an occult horror/dark comedy about a municipal animal-control officer whose Upstate New York community is being terrorized by a creature in the woods. Here’s a (spoiler-free) behind-the-scenes account of the project’s creative inception and development; how it’s responsible for my being blackballed in Hollywood; how the coronavirus pandemic challenged and ultimately elevated the story’s thematic ambitions; and how these characters hounded my imagination—forgive the pun—for no fewer than fourteen years.

The Dogcatcher is on sale in paperback and Kindle formats via Amazon.

In the spring of 2007, I came home from L.A. for a week to attend my sister’s graduation at Cornell University. It was my first occasion to sojourn in the Finger Lakes region, and I took the opportunity to stay in Downtown Ithaca, tour the Cornell campus, and visit Buttermilk Falls State Park. I was completely taken with the area’s scenic beauty and thought it would make the perfect location for a screenplay. Only trouble was, all I had was a setting in search of a story.

CUT TO: TWO YEARS LATER

Binge-watching wasn’t yet an institutionalized practice, but DVD-by-mail was surging, and my wife and I were accordingly working our way through The X-Files (1993–2002) from the beginning. Though I have ethical reservations about Chris Carter’s hugely popular sci-fi series, I admired the creative fecundity of its monster-of-the-week procedural format, which allowed the protagonists, his-and-her FBI agents Mulder and Scully, to investigate purported attacks by mutants and shapeshifters in every corner of the United States, from bustling cities to backwater burgs: the Jersey Devil in Atlantic City (“The Jersey Devil”); a wolf-creature in Browning, Montana (“Shapes”); a prehistoric plesiosaur in Millikan, Georgia (“Quagmire”); El Chupacabra in Fresno, California (“El Mundo Gira”); the Mothman in Leon County, Florida (“Detour”); a giant praying mantis in Oak Brook, Illinois (“Folie à Deux”); a human bat in Burley, Idaho (“Patience”).

But the very premise of The X-Files stipulated that merely two underfunded federal agents, out of approximately 35,000 at the Bureau, were assigned to investigate such anomalous urban legends. I wondered: If an average American town found itself bedeviled by a predatory cryptid (in real life, I mean), would the FBI really be the first responders? Doubtful. But who would? The county police? The National Guard? If, say, a sasquatch went on a rampage, which regional public office would be the best equipped to deal with it…?

That’s when it occurred to me: Animal Control.

And when I considered all the cultural associations we have with the word dogcatcher—“You couldn’t get elected dogcatcher in this town”—I knew I had my hero: a civil servant who is the butt of everyone’s easy jokes, but whose specialized skills and tools and, ultimately, compassion are what save the day.

But it was, to be sure, a hell of a long road from that moment of inspiration to this:

When the basic concept was first devised, I wrote a 20-page story treatment for an early iteration of The Dogcatcher, dated August 25, 2009. That same summer, I signed with new literary managers, who immediately wanted a summary of all the projects I’d been working on. Among other synopses and screenplays, I sent them the Dogcatcher treatment.

They hated it. They argued against the viability of mixing horror and humor, this despite a long precedent for such an incongruous tonal marriage in commercially successful and culturally influential movies the likes of An American Werewolf in London (1981), Ghostbusters (1984), Gremlins (1984), The Lost Boys (1987), Tremors (1990), Scream (1996), and Shaun of the Dead (2004), to say nothing of then–It Girl Megan Fox’s just-released succubus satire Jennifer’s Body (2009). (I knew better than to cite seventy-year-old antecedents such as The Cat and the Canary and Hold That Ghost; Hollywood execs have little awareness of films that predate their own lifetimes.) I was passionate about The Dogcatcher, but it was only one of several prospective projects I was ready to develop, so, on the advice of my new management, I put it in a drawer and moved on to other things.
